Converting PDF to HTML in Python is a common task for web applications that need to display document content dynamically. Libraries like pdfplumber handle extraction from the PDF, and BeautifulSoup helps structure and clean the resulting HTML.

Overview of PDF and HTML Conversion

Converting PDF to HTML transforms static, fixed-layout documents into web content. PDFs are designed to look the same on every device, while HTML offers interactivity and reflows to fit the browser. The process extracts text, images, and formatting from the PDF and recreates them as HTML structure. The main challenges are preserving layout accuracy and formatting details. Tools like pdfplumber (for extraction) and BeautifulSoup (for building the HTML) help developers keep the document intact. This conversion matters for web applications that need searchable, interactive, or responsive content, and it bridges the gap between offline documents and online access.

Importance of Python in PDF to HTML Conversion

Python’s simplicity and extensive libraries make it well suited to PDF-to-HTML conversion. PyPDF2, pdfplumber, and BeautifulSoup cover extraction and HTML construction, and Python’s cross-platform support lets the same script run consistently across environments. Its flexibility allows custom handling of complex layouts, its large community provides support and resources, and scripts scale to large documents or batches of files. Together, these make Python a common choice for developers who need reliable PDF-to-HTML output that preserves document structure and formatting.

Tools and Libraries for PDF to HTML Conversion

Popular tools like PyPDF2, pdfplumber, and BeautifulSoup simplify PDF-to-HTML conversion. These libraries enable text extraction, layout analysis, and HTML parsing, making the process efficient and developer-friendly.

PyPDF2: Features and Capabilities

PyPDF2 is a versatile Python library for PDF manipulation (its development has since continued under the name pypdf). It allows reading, writing, and merging PDF files. Key features include text extraction, page splitting, and encryption. However, it lacks layout analysis, making it less suitable for complex conversions, so developers often combine it with other tools.

pdfplumber: Extracting Text and Layout Information

pdfplumber excels at extracting detailed text and layout data from PDFs. It reports the position of every character, line, rectangle, and image on a page, and it can detect tables. This makes it a strong choice for converting PDFs with complex layouts into HTML, because the positional data lets you keep content organized and formatted correctly. Per-character font names and sizes, and the ability to crop a page to a region (useful for multi-column text), further improve accuracy, which is why it is a preferred choice for precise PDF-to-HTML conversions.

BeautifulSoup4: Parsing and Converting PDF Content

BeautifulSoup4 is a powerful library for parsing HTML and XML documents, but it also plays a role in PDF-to-HTML conversion when used alongside PDF extraction tools. While it doesn’t directly read PDFs, it excels at structuring and cleaning extracted content. By integrating it with libraries like pdfplumber or PyPDF2, you can parse extracted text and layout data, then convert it into well-structured HTML. It’s particularly useful for organizing text, handling tables, and ensuring proper formatting. However, it doesn’t handle image extraction or complex layouts, making it best suited for text-centric PDFs.
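As a sketch of that integration, the function below turns a table in the shape pdfplumber's `extract_tables()` returns (a list of rows, each a list of cell strings or `None`) into an HTML table with BeautifulSoup. Treating the first row as the header is an assumption that suits many, but not all, PDFs; the function name is illustrative.

```python
from bs4 import BeautifulSoup


def table_to_html(rows):
    """Build an HTML <table> from a list of rows; the first row becomes <th> cells."""
    soup = BeautifulSoup("", "html.parser")
    table = soup.new_tag("table")
    for row_index, row in enumerate(rows):
        tr = soup.new_tag("tr")
        for cell in row:
            cell_tag = soup.new_tag("th" if row_index == 0 else "td")
            # pdfplumber uses None for empty or merged cells
            cell_tag.string = (cell or "").strip()
            tr.append(cell_tag)
        table.append(tr)
    soup.append(table)
    # BeautifulSoup escapes characters such as & when serializing
    return str(soup)
```

Because BeautifulSoup handles escaping, cell text containing `&` or `<` comes out as valid HTML without extra work.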

Step-by-Step Guide to Converting PDF to HTML

Convert PDF to HTML in Python by installing libraries like pdfplumber and BeautifulSoup4. Open the PDF, extract text and layout data, then structure and convert it into HTML format. This process ensures content is web-ready while maintaining structure and readability, making it ideal for web applications and dynamic content display.

Installing Required Libraries

To begin converting PDF to HTML in Python, install the necessary libraries using pip. First, install PyPDF2 for handling PDF files:

```bash
pip install PyPDF2
```

Next, install pdfplumber for advanced text and layout extraction:

```bash
pip install pdfplumber
```

Finally, install BeautifulSoup4 for parsing and structuring content:

```bash
pip install beautifulsoup4
```

These libraries provide essential tools for reading, extracting, and converting PDF content into HTML format, ensuring a smooth conversion process.

Opening and Reading PDF Files

To open and read PDF files in Python, use libraries like PyPDF2 or pdfplumber. Install them via pip:

```bash
pip install PyPDF2 pdfplumber
```

With PyPDF2, open the file in read-binary mode, create a reader object, and extract the text from each page:

```python
import PyPDF2

with open("example.pdf", "rb") as file:
    reader = PyPDF2.PdfReader(file)
    for page in reader.pages:
        # extract_text() must be called; it returns the page's plain text
        print(page.extract_text())
```

For more detailed text and layout extraction, use pdfplumber:

```python
import pdfplumber

with pdfplumber.open("example.pdf") as pdf:
    for page in pdf.pages:
        text = page.extract_text()
        print(text)
        # Layout information: each character is a dict with its text, position, and font
        for char in page.objects.get("char", []):
            print(char["text"], char["x0"], char["top"], char["fontname"], char["size"])
```

This approach allows you to read and extract content efficiently, preparing it for conversion to HTML while preserving structure and layout details.

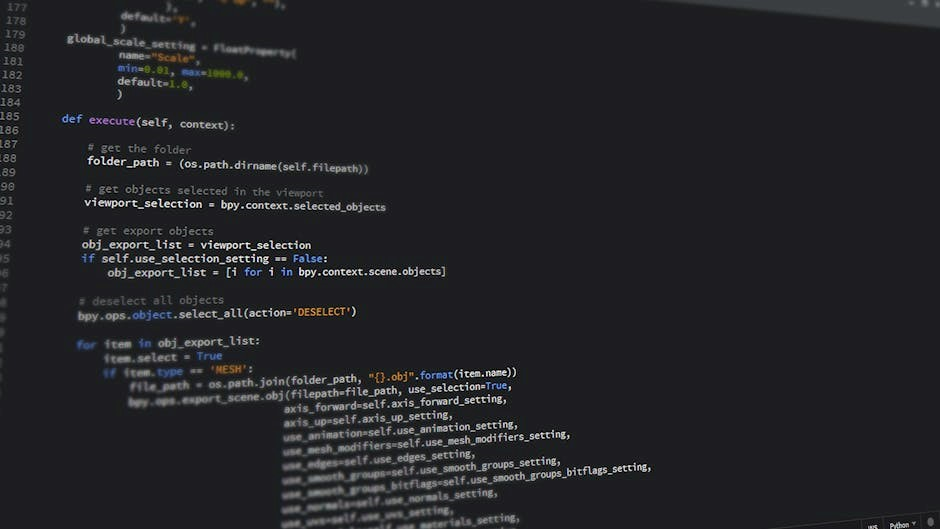

Extracting Text and Layout Information

Extracting text and layout information from PDFs is crucial for accurate HTML conversion. Libraries like pdfplumber and PyMuPDF offer robust tools for this purpose. Use pdfplumber to extract the text of each page along with layout elements such as tables and images:

```python
import pdfplumber

with pdfplumber.open("example.pdf") as pdf:
    for page in pdf.pages:
        text = page.extract_text()
        # Layout elements: each table is a list of rows; each image is a dict with its coordinates
        tables = page.extract_tables()
        images = page.images
```

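For more detailed layout analysis, page.objects (or shortcuts such as page.chars, page.rects, and page.images) gives the position of every character, line, rectangle, and image on the page; tables are located with page.extract_tables() or page.find_tables(). These coordinates let you rebuild the structure of the PDF during conversion, so the HTML reflects the original document’s format and content as closely as possible.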
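One common use of this positional data is keeping table text out of the running paragraphs, since tables are usually rendered separately. In the sketch below, words are dicts with pdfplumber's `x0`, `top`, `x1`, and `bottom` keys (as returned by `extract_words()`), and each box is an `(x0, top, x1, bottom)` tuple such as the `bbox` of a table found by `page.find_tables()`. The helper name is hypothetical.

```python
def words_outside_boxes(words, boxes):
    """Return the words whose centre point lies outside every bounding box."""

    def inside(word, box):
        x0, top, x1, bottom = box
        cx = (word["x0"] + word["x1"]) / 2
        cy = (word["top"] + word["bottom"]) / 2
        return x0 <= cx <= x1 and top <= cy <= bottom

    return [w for w in words if not any(inside(w, b) for b in boxes)]
```

With pdfplumber this might be called as `words_outside_boxes(page.extract_words(), [t.bbox for t in page.find_tables()])`. Testing the word's centre rather than its full extent tolerates words that slightly overlap a table border.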

Converting Extracted Data to HTML

Once text and layout information are extracted, the next step is to convert this data into HTML format. Use Python’s BeautifulSoup library to create and structure HTML elements dynamically. For example:

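```python
from bs4 import BeautifulSoup

# Placeholder: in practice this comes from page.extract_text()
extracted_text = "Text extracted from the PDF"

html_content = BeautifulSoup("", "html.parser")
p_tag = html_content.new_tag("p")
p_tag.string = extracted_text
# A new tag stays detached until it is appended to the document
html_content.append(p_tag)
```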

Add styling or classes as needed to maintain the PDF’s visual hierarchy. Finally, write the HTML content to a file:

```python
# Write with an explicit encoding so non-ASCII text is preserved
with open("output.html", "w", encoding="utf-8") as f:
    f.write(str(html_content))
```

This approach ensures that the extracted data is accurately transformed into a structured HTML document, ready for web integration.

Handling Complex PDF Layouts

Complex PDF layouts, such as multi-column text and embedded images, require careful processing to maintain structure in HTML. Use libraries like pdfplumber to extract layout information, including text positions and image coordinates. For multi-column layouts, analyze text bounding boxes to determine column breaks and replicate them using HTML and CSS. Images can be embedded directly into the HTML while preserving their original positions. Ensure fonts and formatting are mirrored by applying appropriate CSS styles. Custom scripting may be necessary to handle intricate layouts accurately, ensuring the final HTML resembles the original PDF structure as closely as possible. This step is crucial for preserving the visual integrity of the document.
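A minimal sketch of replicating positions with CSS: each word is placed in an absolutely positioned `<span>` inside a container the size of the page. PDF coordinates are in points, so the sketch uses `pt` units; the word dicts follow pdfplumber's `extract_words()` keys (`top` is measured from the top of the page), and the function and class names are hypothetical.

```python
import html


def positioned_page_html(words, page_width, page_height):
    """Render words as absolutely positioned spans inside a page-sized container."""
    spans = [
        '<span style="position:absolute; left:{:.1f}pt; top:{:.1f}pt">{}</span>'.format(
            w["x0"], w["top"], html.escape(w["text"])
        )
        for w in words
    ]
    return (
        '<div class="pdf-page" style="position:relative; '
        'width:{:.1f}pt; height:{:.1f}pt">\n{}\n</div>'.format(
            page_width, page_height, "\n".join(spans)
        )
    )
```

With pdfplumber, `positioned_page_html(page.extract_words(), page.width, page.height)` produces one page. Absolute positioning reproduces the look closely but reflows poorly on small screens, so it suits faithful "print view" pages more than responsive layouts.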

Handling Complex PDFs

Complex PDFs with multi-column layouts, images, or intricate formatting require advanced processing. Use Python libraries like pdfplumber to extract layout information and handle these challenges effectively.

Dealing with Multi-Column Layouts

Multi-column layouts in PDFs can be challenging because of varying text alignment and structural complexity. With pdfplumber, you can use word positions to locate the column boundaries, then crop the page to each column with page.crop() and extract its text separately. For tables, tabula-py can help extract structured data. When converting, preserve text alignment and spacing in the HTML; BeautifulSoup can help restructure the content for web display. Automated tools simplify the process, but precise formatting may still need manual adjustment. Careful extraction and restructuring keep multi-column content readable and visually faithful in the final HTML output.
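A simple heuristic for a two-column page, sketched under that assumption: find the widest horizontal gap that no word crosses and treat it as the column boundary. Words are dicts with pdfplumber's `x0`, `x1`, and `top` keys; the helper names and the `min_gap` threshold (in points) are illustrative. Once you have the boundary, `page.crop((0, 0, split, page.height))` and `page.crop((split, 0, page.width, page.height))` let pdfplumber extract each column's text separately.

```python
def find_column_split(words, min_gap=10.0):
    """Return the x position in the middle of the widest gap between words, or None."""
    spans = sorted((w["x0"], w["x1"]) for w in words)
    if not spans:
        return None
    best_gap, split = 0.0, None
    covered_to = spans[0][1]  # rightmost x covered by any word seen so far
    for x0, x1 in spans[1:]:
        gap = x0 - covered_to
        if gap >= min_gap and gap > best_gap:
            best_gap, split = gap, (covered_to + x0) / 2
        covered_to = max(covered_to, x1)
    return split


def _reading_order(word):
    return (word["top"], word["x0"])


def split_columns(words, split):
    """Partition words at x = split into left and right columns, each in reading order."""
    left = sorted((w for w in words if w["x0"] < split), key=_reading_order)
    right = sorted((w for w in words if w["x0"] >= split), key=_reading_order)
    return left, right
```

Because words from many lines overlap horizontally, ordinary spaces between words disappear from the coverage and only the gutter between columns remains as a wide gap. Pages with full-width headings crossing the gutter need those lines handled first.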

Extracting Images and Embedded Objects

Extracting images and embedded objects from PDFs is essential for preserving visual content in HTML. pdfplumber reports the position of each image, and PyMuPDF can extract the embedded image data itself. These images can then be saved and referenced in HTML using `<img>` tags. Embedded objects, such as fonts or annotations, may require additional processing; pdfminer, the engine behind pdfplumber, reports the font name and size of each character, which helps when reproducing fonts in CSS. When converting, optimize images for web use and format embedded objects correctly to maintain the document’s integrity and visual appeal in the final HTML output.

Preserving Fonts and Formatting

Preserving fonts and formatting during PDF to HTML conversion is crucial for maintaining document integrity. pdfplumber exposes each character’s font name and size, which helps retain formatting details, whereas PyPDF2’s `extract_text()` returns plain text. HTML and CSS can then replicate the original layout, with fonts embedded or referenced through CSS.
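pdfplumber's character dicts carry `fontname` and `size`, which is enough for a rough CSS approximation. The sketch below guesses weight and style from the font name (a heuristic: names like `Helvetica-Bold` or `ABCDEF+Times-Italic` often, but not always, signal the style) and strips the six-letter subset prefix that embedded fonts frequently carry. The function name is hypothetical, and you may want to append a generic fallback family.

```python
import re


def font_css(fontname, size):
    """Approximate a PDF font as an inline CSS declaration string."""
    # Embedded subset fonts are often named like "ABCDEF+Times-Bold"; drop the tag
    base = re.sub(r"^[A-Z]{6}\+", "", fontname)
    # The family is the part before a style suffix such as "-Bold" or ",Italic"
    family = re.split(r"[-,]", base)[0]
    lower = base.lower()
    weight = "bold" if "bold" in lower or "black" in lower else "normal"
    style = "italic" if "italic" in lower or "oblique" in lower else "normal"
    return (
        f"font-family: '{family}'; font-weight: {weight}; "
        f"font-style: {style}; font-size: {size:.1f}pt"
    )
```

Applied per word or per run of characters with the same font, the result can go straight into a `style` attribute.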

Best Practices for PDF to HTML Conversion

Adopt consistent formatting, validate HTML output, and test cross-browser compatibility. Use CSS for styling and ensure accessibility standards are met for optimal web performance and readability.

Ensuring Accuracy in Text Extraction

Accurate text extraction is crucial for reliable PDF-to-HTML conversion. pdfplumber keeps the position of every character, which helps preserve the document’s structural integrity; PyPDF2 is a lighter option when plain text is enough. For complex layouts, review and clean the extracted text manually. Keep libraries up to date to benefit from improvements in text extraction, and compare the extracted content against the original PDF to catch discrepancies. Post-processing, such as removing unwanted characters or stray formatting, further improves precision. Combining these methods yields the high-quality text that seamless HTML conversion depends on.
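A few of those post-processing steps, sketched with the standard library only: NFKC normalization (which, among other things, turns ligature characters such as "ﬁ" into "fi"), rejoining words hyphenated across line breaks, and collapsing runs of spaces. The function name is illustrative, and the rejoin rule is a heuristic that will also merge genuine hyphenated compounds that happen to break at a line end.

```python
import re
import unicodedata


def clean_extracted_text(text):
    """Normalize common artifacts in text extracted from a PDF."""
    # NFKC folds compatibility characters, e.g. the "ﬁ" ligature becomes "fi"
    text = unicodedata.normalize("NFKC", text)
    # Rejoin words split as "conver-\nsion" (letter, hyphen, newline, letter)
    text = re.sub(r"(\w)-\n(\w)", r"\1\2", text)
    # Collapse runs of spaces and tabs, but keep line breaks
    text = re.sub(r"[ \t]+", " ", text)
    # Trim each line
    return "\n".join(line.strip() for line in text.splitlines())
```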

Maintaining Document Structure

Maintaining document structure is vital for accurate PDF-to-HTML conversion. Use libraries like pdfplumber to extract text and layout information, then identify headings, paragraphs, and tables. Because many PDFs do not mark headings explicitly, font size and weight are the usual signals. Map each element to an appropriate HTML tag, such as <h1> for headings or <ul> for lists, and make sure nested elements like lists and tables are structured correctly. Libraries automate much of this, but complex layouts may need manual adjustment. Preserving the original document’s hierarchy keeps the HTML visually consistent and semantically accurate, which matters for both readability and web accessibility.
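A minimal sketch of that mapping, under the assumption that text size is the main signal: the most common size is treated as body text, and lines noticeably larger become `<h1>` or `<h2>`. The input is a list of `(text, size)` pairs, which you might assemble from pdfplumber's words or characters; the function name and the 1.6 and 1.2 thresholds are illustrative and usually need tuning per document.

```python
import html
from collections import Counter


def lines_to_semantic_html(lines):
    """Map (text, font_size) pairs to <h1>, <h2>, or <p> elements."""
    if not lines:
        return ""
    # The most frequent size is assumed to be the body text size
    body_size = Counter(round(size, 1) for _, size in lines).most_common(1)[0][0]
    parts = []
    for text, size in lines:
        if size >= body_size * 1.6:
            tag = "h1"
        elif size >= body_size * 1.2:
            tag = "h2"
        else:
            tag = "p"
        parts.append(f"<{tag}>{html.escape(text)}</{tag}>")
    return "\n".join(parts)
```

Using the most frequent size as the baseline makes the rule relative, so it adapts to documents set in 9 pt or 12 pt body text alike.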

Optimizing HTML Output for Web Use

Optimizing HTML output makes your converted content web-ready. Clean up the HTML by removing redundant tags and ensuring proper indentation. BeautifulSoup can restructure and tidy the markup, but it is a lenient parser rather than a validator, so check conformance to web standards with a validator such as the W3C Nu HTML Checker to support cross-browser compatibility. Add alt text to images and use semantic tags for better accessibility. Minify the HTML to reduce file size and improve load times. Finally, test the output in different browsers to confirm it renders consistently. These optimizations make your HTML both functional and fast for users.
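Two of these clean-up steps, sketched with BeautifulSoup: removing elements left empty after conversion and making sure every `<img>` has an `alt` attribute. The set of tags treated as removable when empty and the empty default alt text (which marks an image as decorative) are assumptions to adjust for your content.

```python
from bs4 import BeautifulSoup

REMOVABLE_WHEN_EMPTY = ["p", "span", "div"]  # illustrative choice


def tidy_html(markup, default_alt=""):
    """Drop empty wrapper elements and add missing alt attributes to images."""
    soup = BeautifulSoup(markup, "html.parser")
    # Reverse document order visits children before their parents,
    # so a wrapper that only held empty children is removed too
    for tag in reversed(soup.find_all(REMOVABLE_WHEN_EMPTY)):
        if not tag.get_text(strip=True) and tag.find(True) is None:
            tag.decompose()
    for img in soup.find_all("img"):
        if not img.has_attr("alt"):
            img["alt"] = default_alt
    return str(soup)
```

Existing `alt` values are left untouched, so the function can run after a step that writes real descriptions.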

Common Errors and Solutions

Common errors include encoding issues, corrupted PDFs, and memory constraints. Solutions involve using reliable libraries like pdfplumber and ensuring proper file handling and encoding detection.

Handling Corrupted PDF Files

Corrupted PDF files can cause conversion failures. Wrap the code that opens or reads a PDF in try-except blocks. PyPDF2 raises PdfReadError for files it cannot parse, and pdfminer, on which pdfplumber is built, raises its own exceptions, such as PDFTextExtractionNotAllowed when a document forbids text extraction. To recover, try repairing the PDF with a tool like Ghostscript, or validate the file structure before processing. Log exceptions and give users clear feedback. If the first attempt fails, consider an alternative library or a more lenient mode, so the system stays stable and informative when it detects corruption.

Resolving Encoding Issues

Encoding issues can arise when converting PDF to HTML, especially with non-English text. PDF files are opened in binary mode, so there is no text encoding to specify at that step; pdfplumber and PyPDF2 return extracted text as Python strings. Problems usually surface elsewhere: when the HTML is written without an explicit encoding (open the output file with ‘utf-8’ and declare the same charset in the document), or when a font lacks a Unicode mapping, which produces garbled or missing characters. Encoding detection libraries like chardet help only when you handle raw bytes from other sources, such as text files that accompany the PDFs. Add error handling and fallbacks for text that cannot be decoded, and check whether the PDF embeds its fonts correctly, since missing fonts and mappings are a common cause of mismatches. Catch encoding-related exceptions, such as UnicodeEncodeError and UnicodeDecodeError, in try-except blocks so that extraction and conversion fail gracefully.
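A sketch of two of those checks: counting the `(cid:N)` placeholders that pdfminer, and therefore pdfplumber, emits for glyphs it cannot map to Unicode, and writing the HTML as explicitly declared UTF-8. The function names and the simple page template are hypothetical.

```python
import html
import re

CID_PATTERN = re.compile(r"\(cid:\d+\)")


def unmapped_glyph_count(text):
    """Count pdfminer's "(cid:N)" placeholders, a sign of missing Unicode mappings."""
    return len(CID_PATTERN.findall(text))


def write_utf8_html(text, path, title="Converted PDF"):
    """Write text as paragraphs in an HTML file that declares and uses UTF-8."""
    paragraphs = "\n".join(
        f"<p>{html.escape(line)}</p>" for line in text.splitlines() if line.strip()
    )
    document = (
        '<!DOCTYPE html>\n<html>\n<head>\n<meta charset="utf-8">\n'
        f"<title>{html.escape(title)}</title>\n</head>\n<body>\n{paragraphs}\n</body>\n</html>\n"
    )
    # An explicit encoding avoids platform defaults such as cp1252 on Windows
    with open(path, "w", encoding="utf-8") as fh:
        fh.write(document)
```

A nonzero `unmapped_glyph_count(page.extract_text() or "")` is a useful signal that the page needs OCR or manual review rather than a different text encoding.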

Managing Memory Constraints

When converting large PDFs to HTML, memory can become a constraint. To mitigate this, process the document page by page rather than all at once; PyPDF2 and pdfplumber both let you iterate over pages one at a time. Extract each page’s text and layout information, convert it to HTML, and write that output before moving on, instead of building the whole document in memory. For extremely large files, generators or iterators are a natural way to yield content progressively. Implement error handling so the script fails gracefully if memory does run short, keeping it efficient and robust even with limited resources.

Converting PDF to HTML in Python is efficient with tools like PyPDF2 and pdfplumber. Future improvements may include enhanced AI-driven layout detection and improved library support for complex PDFs.

The process involves extracting text and layout data from PDF files using libraries like PyPDF2 or pdfplumber. Once extracted, the data is parsed and converted into HTML format. Tools like BeautifulSoup can further refine the output, ensuring proper structure. The final HTML retains the original content’s integrity, making it suitable for web use. Best practices include ensuring text extraction accuracy and maintaining the document’s structure. Testing with various PDF formats is recommended to handle complexities. While the process is straightforward, advanced PDFs may require additional steps for optimal results.

Future Enhancements in PDF to HTML Conversion

Future advancements in PDF to HTML conversion aim to improve accuracy and efficiency. Enhanced layout retention and better handling of complex PDF structures are expected. Integration of AI and machine learning could automate formatting and improve text extraction. Support for embedded multimedia and advanced typography is also anticipated. Developers are exploring faster processing speeds and reduced memory usage. These improvements will make PDF-to-HTML conversion more reliable and scalable for large-scale applications, ensuring seamless integration into web-based systems.